The Emperor’s New Clouds

TLDR

Solutions offered by major cloud providers aren't always a good fit for startups, despite the preconceived ideas that became the norm in the low-interest/easy-VC era. What's ideal for large profitable organizations might not be the best for small companies. Doing as Netflix does won't make you Netflix. A fashion victim approach to technology insources significant risk related to complexity, lock-in and cost.

El Cloudillo

The story often goes like this:

The Emperor or, in Spanish, El Cloudillo, really wants to use the cloud in his startup Empire. The initial reason was that the cloud seemed to be the new black. Then it was its infinite scalability, which matched the forecast but yet-to-be-seen expansion of the Empire. Then it was the fact that every other emperor seemed to be using it, even if their empires weren't comparable in size. The Emperor lacks the granular knowledge to distinguish between different cloud offers - he can’t tell databases from filesystems - but trusts his feelings more than the well-documented opinions of DHH or Andreessen Horowitz.

The Emperor operates on the PowerPoint layer of things: he loves fashion and cares about being regarded as innovative. He is often advised by a number of in-house Microservants who convince him that his cloud strategy is sound. The Empire's investors are meanwhile paying the bill.

You might have met a couple of Emperors, Empires and Microservants at different points in your career, but decoding their behaviours and beliefs takes a bit of cloud history.

The cloud commoditized Linux

The most relevant contribution to a better world from cloud providers was the commoditization of Linux. This was truly innovative and groundbreaking in its day.

Running Linux on your own hardware is no drama: you need a certified hardware supplier, a co-location contract, a virtualization system and modest knowledge of RAID and monitoring. You also need some capacity planning experience. I ran projects using dedicated hardware with great stability and performance at a tiny fraction of what the corresponding cloud cost would be. But the know-how wasn't put together overnight.

Running virtual servers in the cloud allows teams to abstract from hardware, data centers, virtualization, RAID and capacity planning. You can just create as many Linux servers as you like whenever you need. You can resize them and shut them down as you please. You can take snapshots and revert to those snapshots. You don’t need hardware skills in your team.

Even if your specific project is more suited to having dedicated hardware in the medium term, the ability to run virtual servers in the cloud allows work to move in parallel during early stage development, as hardware details are being ironed out. It also allows you to run PoCs you aren't sure will have a future without committing to extra hardware.

All of that is great.

Linux is not Linux

I said that the cloud commoditized Linux. What do I mean by Linux?

Linux is not Linux. When people refer to Linux, they mean a large open source distribution built around an OS kernel called “Linux”. Thus, by making it easy to launch Ubuntu, Debian, RHEL and other virtual server types, what the cloud initially commoditized was access to large collections of open source software that remain consistent over long periods. The cloud made it easy to use Apache, Nginx, Python, PHP, MySQL, PostgreSQL, Celery, Redis and many other packages, with security updates and support contracts from suppliers like Red Hat, Canonical or SUSE.

That's obviously very good.

Linux configuration management

Having an easy way to create Linux servers or, better said, collections of open source software of various sizes led to the need for an efficient way to configure them. In particular, the ability to create small virtual servers enabled a much better isolation of server roles, the drawback being a larger number of servers to be managed.

Enter configuration management. The Linux configuration management scene grew significantly throughout the 2010s and into the 2020s, and brought a number of different options to the table.

While configuration management is far from trivial, refined use of these tools enabled efficient management of large fleets and rational use of the cloud with minimal vendor lock-in. In fact, Linux servers are the same everywhere and configuration management tools are also installed on a Linux server. In this scenario, if the cloud provider raises prices too much, provides bad performance or poor support, moving to a different provider or to dedicated hardware is relatively easy because the modus operandi remains mostly the same.

This is obviously not good for cloud providers!

Linux configuration management is bad news for cloud providers because it fosters competition and, therefore, keeps them honest. Thus, Linux configuration management had to die for vendor lock-in to go on living.

Cloud-native components are harder than hardware

The solution to implement vendor lock-in was to “commoditize” open source components directly instead of Linux distributions. Cloud vendors started to implement load balancers, databases, message queues, container runtimes, and language-specific function runtimes (aka serverless).

While this commoditization process might seem similar to what was done for hardware, there is a subtle difference: consuming the provider’s implementation of individual components entails processes not related to development, also known as “ops”, which in this case are provider-specific. It's the “ops” that generate vendor lock-in. Cloud-native components de-uniformize the operations: AWS ops are not the same as GCP ops or Azure ops. They're similar in concept but different in practice, and that creates a very strong opposition to change. Unlike with servers, for which universal configuration management exists, there is no such thing as universal cloud configuration management.

But are ops even considered for decision-making?

Perhaps more naïve people than I thought are actually involved in computer engineering decisions. People often think that using cloud-native components eliminates the ops rather than complicates them. A PowerPoint "engineer" might describe the situation along these lines:

Oh, it's just Docker, Postgres and Redis but running in the cloud.

In fact, this translates to either a snowflake configured by hand or a complex-to-maintain, vendor-specific Infrastructure as Code project.

Hey, but ...

Well, actually no:

- Terraform code is different across different cloud providers

- Even within the same provider the code often needs to be patched due to cloud behavior drift

Terraform does help to avoid cloud snowflakes and, therefore, to enable disaster recovery, but it requires intensive maintenance and is not provider independent.

Specifically, Terraform doesn't provide the reproducibility level of Puppet on an LTS Linux distribution, for instance. In fact, with traditional Linux configuration management you can aim at up to 13 years of process reproducibility without drift - this gives you the power to define whichever infrastructure life cycle length makes sense for your business (3 years?, 5 years?, ...). Have a look at the RHEL and Ubuntu life cycles.

Of course, since cloud-native is a Hype Train, few people took their time to check the details before they bought a ticket. The outcome is an entire generation who doesn’t know how to run servers, databases, reverse proxies, etc., and is now stuck in the realm of cloud-native components.

This generation also forgot the meaning of load average, CPU usage and I/O, and how grep, tail, top, netstat, tcpdump, netcat, ssh and many other commodity Linux tools enable short feedback loops while troubleshooting systems.

They're currently busy deploying containers instrumented with prints so that they can stare at Cloudwash and figure out why requests sent to the load balancer sometimes don't reach any container. Oh, so sorry for my bluntness. I meant to say they are now practicing observability and fixing bugs at the rate of 1 per day, while blocking the entire staging environment (“we can’t have more environments because they are expensive”, as we will see below).

Therefore, an entire class of problems that would take some quick greps and tcpdumps on any Linux machine - whether it had 1 or 48 vCPUs - translates to a very high number of troubleshooting hours from teams who aren't equipped with efficient tools.

The fictional but plausible team described here might just end up recommending “more containers” because “the cluster is saturated”. Or something equally vague - the absence of server experience also means a lack of granular understanding of the differences between CPU usage, I/O, latency, throughput, etc.

This might hurt sensitive readers, but running cloud-native components is the cloud equivalent of assembling hardware - at a higher price, with higher maintenance, and using less efficient tools. Which is exactly what the move to the cloud was meant to prevent.

Hey, but uniformizing the components by running a Kubernetes cluster solves that problem, right? Right?

...

I will let Hacker News sort out this one.

In short, cloud-native components might give you infinite scalability, which is cool if you need it. But do you really need more than a couple of virtual servers with 48 vCPUs? Or are you solving a problem you don't even have? You can get cloud servers that size from most providers, including simple-to-use providers such as Hetzner and Digital Ocean.

Of course, cloud-native promises zero downtime too, but that's not likely to happen. First, because if your solution uses multiple cloud-native services, a failure on a single service might bring your system down. And, second, you're likely to have downtime caused by human error due to the extra operational complexity cloud-native entails.
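The first point can be put into numbers with a rough sketch. It assumes that the system needs every service to be up and that failures are independent, which is a simplification. The service names and the 99.9% figures below are hypothetical and are not taken from any provider's SLA.

```python
from math import prod

HOURS_PER_YEAR = 24 * 365


def composite_availability(availabilities):
    """Availability of a system that needs every listed service to be up.

    Assumes independent failures (a simplification).
    """
    return prod(availabilities)


def downtime_hours_per_year(availability):
    """Expected yearly downtime, in hours, for a given availability."""
    return (1 - availability) * HOURS_PER_YEAR


# Hypothetical figures for illustration only, not real SLA values.
services = {
    "container_runtime": 0.999,
    "database": 0.999,
    "load_balancer": 0.999,
}

combined = composite_availability(services.values())
print(f"combined availability: {combined:.4%}")
print(f"expected downtime: {downtime_hours_per_year(combined):.1f} h/year")
```

Under these assumptions the combined availability is always lower than that of the weakest service, so every extra cloud-native dependency makes the zero downtime promise a little harder to keep.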

By the way, the amount of downtime an organization can tolerate is also an engineering trade-off.

Complex numbers

To check whether the problem of cost is imaginary or real we need to go through some complex numbers. Let's start by implementing the hello world of cloud cost estimation. Suppose we are running an early stage startup with an initial small number of customers. We might be able to run production with:

- 2 vCPUs

- 4GB of RAM

- 40GB of disk space

- 4TB of egress data

Let's think of this system as the smallest system suitable for a production setup and consider that, as the company grows, it might need 2x, 4x, 8x,... more resources.

Here are some candidate solutions for this base configuration and their corresponding costs:

| Item meeting the minimum specs | Price (USD) | Notes |
|---|---|---|
| Hetzner VM – CX21 | 5.22 | 80G HDD, 20TB traffic, original price 4.85 EUR |
| Digital Ocean VM | 24.00 | 40G HDD, 4TB traffic |
| AWS EC2 VM – t4g.medium + 40G EBS + 4TB traffic | 27.75 | not including IOPS and throughput |
| AWS cloud-native solution | | |
| - AWS Fargate container (1 vCPU, 2G RAM) | 36.04 | |
| - AWS Aurora v2 RDS database (2 ACU, 2G RAM, 40G HDD, ? vCPUs) | 175.20 | base price with default IO rates |
| - AWS Load Balancer | 32.77 | 4TB traffic, 50 new conns/s, conns lasting 2m |
| AWS cloud-native total | 244.01 | |

Shocking as it may be, we see a range of prices between ~5 USD and ~244 USD.

The prices for the Hetzner and Digital Ocean VMs were very easy to find - see here and here. The price for the AWS VM required a mix of these links but includes some uncertainty, since there are extra charges for IOPS which aren't easy to calculate. The price for the AWS cloud-native container-based solution, which includes a database and a load balancer, was calculated using these three links and also includes considerable uncertainty, again due to parameters that aren't easy to estimate. For this solution, however, a quick estimation attempt results in 10x the cost of a Digital Ocean VM and almost 50x the price of a Hetzner VM. Please note that the AWS cloud-native solution, as listed in the table, does not provide extra redundancy because it uses only one container - using two for redundancy would add 36.04 USD to the monthly bill, raising the total to ~280 USD. Now we have to consider that several environments will be necessary and that their size will grow over time, not only due to more customers but also due to the growth of development and testing activity.
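For readers who want to redo the arithmetic, here is a minimal sketch that reproduces the totals and ratios from the monthly prices in the table. The `projected_monthly_cost` helper is my own simplification: it assumes the bill grows linearly with the number of environments and with the size multiplier (2x, 4x, 8x, ...), which real price lists won't follow exactly.

```python
# Monthly prices in USD, copied from the table above.
VM_PRICES = {
    "hetzner_vm": 5.22,
    "digital_ocean_vm": 24.00,
    "aws_ec2_vm": 27.75,
}
AWS_NATIVE_COMPONENTS = {
    "fargate_container": 36.04,
    "aurora_v2_database": 175.20,
    "load_balancer": 32.77,
}


def aws_native_total(containers=1):
    """Monthly cost of the cloud-native setup with the given container count."""
    extra = (containers - 1) * AWS_NATIVE_COMPONENTS["fargate_container"]
    return round(sum(AWS_NATIVE_COMPONENTS.values()) + extra, 2)


def projected_monthly_cost(base_price, environments, size_factor):
    """Hypothetical linear projection: every environment costs the same and
    the price scales in proportion to the size multiplier."""
    return round(base_price * environments * size_factor, 2)


total = aws_native_total()
print(f"AWS cloud-native total: {total} USD")
print(f"with a second container: {aws_native_total(containers=2)} USD")
print(f"vs Digital Ocean VM: {total / VM_PRICES['digital_ocean_vm']:.1f}x")
print(f"vs Hetzner VM: {total / VM_PRICES['hetzner_vm']:.1f}x")

# Example: three environments (say production, staging and testing) at 2x size.
for name, price in [("hetzner_vm", VM_PRICES["hetzner_vm"]), ("aws_native", total)]:
    print(name, projected_monthly_cost(price, environments=3, size_factor=2))
```

The three-environment, 2x scenario is only an illustration. The point is that whatever multiplier applies to your case, it applies to the base price you picked on day one.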

Extending this exercise to GCE is left as an exercise for the reader.

The next question is: which of these solutions should a startup implement in its early stage? Can startups afford the luxury of scaling while running cloud-native?

Probably not, unless they use contortionism.

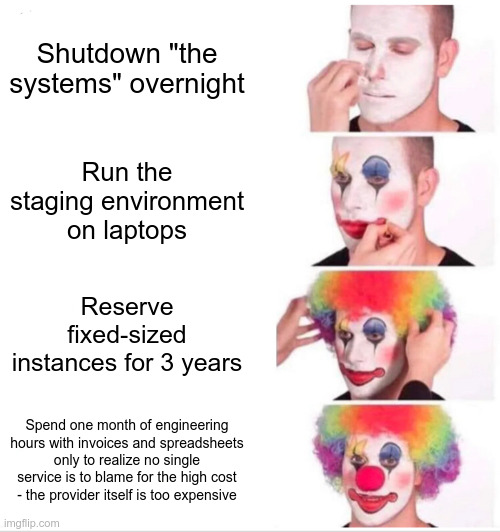

The FinOps circus

Welcome to the FinOps circus. Showing epidemic growth since 2018, this F-word provides you a couple of ways to make the cloud bill more affordable by defeating the very purpose of going to the cloud.

It might sound like a joke but it isn't. Cloud pricing, especially from large providers and particularly for native components, is designed in such a way that complexity is hard to manage from the customer perspective. It's an asymmetric fight between the designers of complexity and those trying to understand it. This fight can be fought, but the customer can't win. Best case scenario, mitigation of gross resource misallocations can be achieved. Or how about spending some more by contracting FinOps consultants?

This is why it is tempting to go for the cheap shots suggested in the picture above, but even for those the devil is in the details.

Let's see: if your business only operates during business hours, you could consider shutting down "the systems" during the night. Otherwise, you could shut down staging or testing systems. Do you assign this job to a specific person, say Mr Smith? Does Mr Smith need to manually sync with the whole team to ensure that they're not using the environment? What if they are? What if they don't reply? How long should he wait? Can Mr Smith go on holiday? Alternatively, should everybody in the team have permissions to bring down/up the infrastructure? Or should you simply ban work after 6pm, even if it jeopardizes any extra work which might prove crucial for the company?

Furthermore, how do you shut down "the systems" overnight if they're based on cloud-native components? You'd need to delete the components and create them again in the morning. If there are databases, you'd need to back them up before destroying them and restore from the backups in the morning. Same for storage buckets or network filesystems. You simply can't turn them off like a server. How does it actually get done? Certainly not with clicks in the UI. You'd need a reliable IaC project wrapped by scripts that perform backup/restore activities. But if you execute this every day, how often will cloud behaviour drift make the re-creation of the environment fail and trigger emergency Terraform maintenance rather than allow planned work to move forward in the morning?

Thinking that you can reproduce a full cloud-native staging environment on your laptop is yet another theoretical idea that won't survive reality. It's never quite the same and a single difference in behaviour will have you break production, jeopardizing that zero downtime somebody sold you on.

As for reserving fixed-size instances for 3 years, in case you use large, expensive cloud servers... there goes the flexibility and elasticity the cloud was supposed to bring.

Cloud scale anxiety

OK, let's forget all the cheap shots...

Of course! What took us so long? Autoscaling is the Final Boss of the FinOps game. Native container runtimes implement autoscaling of the number of running containers whereas cloud-native databases implement autoscaling of the underlying server size (CPU and memory). This looks compelling in PowerPoint presentations.

Expectations: the system is small by default and grows on-demand when necessary, so we pay extra strictly when necessary.

Reality: this only works in ideal conditions, when demand shows a smooth, well-understood, slowly varying, periodic behaviour.

Challenges:

- you have to define demand

- you have to identify your bottlenecks

- you have to profile your system to understand how large it should be as a function of demand

- you might need a custom metric for defining demand if the bottleneck is not clearly CPU or Memory

Risks:

- scaling takes time and metric updates also take time

- it might be that the system takes too long to scale-out and some customer requests are not served

- it might be that the system takes too long to scale-in and more than the expected amount will be charged

- scale-in events might kill containers that are still serving requests (example here)

- overuse from a third party might prevent scale-in events from happening, leading to an astronomical bill

Some of the risks and challenges are described in more detail in this article.
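The timing risks can be seen in a toy simulation. This is a sketch of a purely reactive autoscaler, not of any provider's actual algorithm: it assumes demand is observed once per time step and that a sizing decision only takes effect `lag_steps` steps later. All names and numbers are hypothetical.

```python
import math


def simulate(demand, capacity_per_container, lag_steps, min_containers=1):
    """Toy reactive autoscaler.

    At each step the scaler sizes the fleet for the demand it has just
    observed, but the new size only becomes effective lag_steps later.
    Returns (requests_not_served, container_steps_billed).
    """
    running = min_containers
    pending = []  # (step at which the new size becomes effective, size)
    dropped = 0
    billed = 0
    for step, requests in enumerate(demand):
        # Apply every sizing decision that has become effective by now.
        while pending and pending[0][0] <= step:
            running = pending.pop(0)[1]
        served = min(requests, running * capacity_per_container)
        dropped += requests - served
        billed += running
        # Decide the size needed for the demand observed in this step.
        target = max(min_containers, math.ceil(requests / capacity_per_container))
        pending.append((step + lag_steps, target))
    return dropped, billed


# Hypothetical demand: a short spike in the middle of a quiet period.
demand = [10, 10, 50, 50, 10, 10]
for lag in (1, 2):
    dropped, billed = simulate(demand, capacity_per_container=10, lag_steps=lag)
    print(f"lag={lag}: dropped={dropped}, container-steps billed={billed}")
```

In this toy model a longer lag means more dropped requests during the spike, while the extra containers arrive late and keep being billed after the spike has gone. You pay for the capacity either way, just not at the time you needed it.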

So, do you need to increase the number of containers, the database or both? Which metric should trigger autoscaling? Which limits should be set up?

Getting autoscaling right requires many hours of engineering unless there's a trivial demand pattern (day vs night, X last days of the month, etc). Even in those cases a sequence of scheduled changes between fixed sizes might be less prone to surprises. But note that proper alerts need to be put in place to check for failed scaling changes: a failed scale-out request might leave your system very slow whereas a failed scale-in request might leave your system very expensive.
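A scheduled approach with the corresponding alerts can be sketched in a few lines. The schedule, the sizes and the function names below are hypothetical; in practice the actual size would come from your provider's API or from your monitoring system.

```python
from typing import Optional

# Hypothetical schedule: (start hour inclusive, end hour exclusive, containers).
SCHEDULE = [
    (0, 8, 2),    # night
    (8, 20, 6),   # business hours
    (20, 24, 2),  # evening
]


def desired_size(hour: int) -> int:
    """Return the planned number of containers for a given hour (0-23)."""
    for start, end, size in SCHEDULE:
        if start <= hour < end:
            return size
    raise ValueError(f"hour out of range: {hour}")


def scaling_alert(hour: int, actual_size: int) -> Optional[str]:
    """Compare the actual size with the plan and describe any mismatch."""
    wanted = desired_size(hour)
    if actual_size < wanted:
        return (f"scale-out failed: {actual_size} running, {wanted} planned "
                "(system may be very slow)")
    if actual_size > wanted:
        return (f"scale-in failed: {actual_size} running, {wanted} planned "
                "(system may be very expensive)")
    return None


print(scaling_alert(9, 2))   # morning scale-out did not happen
print(scaling_alert(22, 6))  # evening scale-in did not happen
print(scaling_alert(3, 2))   # size matches the plan, so no alert
```

The check is deliberately dumb: it doesn't try to guess demand, it only verifies that the planned change actually happened.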

Cloud scale anxiety: this is what you might be feeling and passing on to your teams. You want your system to be performant, but can't afford to hold it stable at the necessary size. Dealing with the complexity of frequent scale changes will likely result in the enshittification of your ops.

Here's something cooler than autoscaling: the ability to afford a large system that is designed to sustain the predicted demand for a reasonable number of years so that the team can focus on development rather than on mitigation of complex autoscaling issues. Buy or rent some large servers from a cost effective provider, breathe deeply and let your worries go.

So cloud-native components definitely and universally suck?

Not universally, they don't.

The price is high. The operation is labour intensive, vendor-specific and prone to drifts. And there is also the IAM topic. Often, native monitoring tools are not efficient and more money needs to be spent elsewhere.

But high cost and operational burden can be dealt with using money, larger ops teams and slower progress. Furthermore, granular permissions, diversity of services, or easy scalability are valid business requirements. They're more likely to be found in large organizations, though, and justify the use of cloud-native components from large providers.

Easy scaling features have real value, even if they come at a very high cost. You're able to change the effective size of a system, either a container runtime or a database, in a controlled way and without any interruption, which means you can adapt to market demand changes in the natural timescale of market changes. This is neither autoscaling nor a cost-saving measure - it's a flexibility feature of an expensive system which, if carefully used, won't add operational complexity.

Essentially, what we need to accept is that the economics of large organizations is different from that of small businesses. The money is different, the trade-offs are different, and the leverage over the market is also different. A large organization with bureaucracy issues onboarding suppliers might consider that a 10-year vendor lock-in is acceptable compared to dealing with multiple smaller providers or multiple ways of doing things (on prem + cloud, for example). Would this make sense for an ambitious startup?

A large organization with talent-retention difficulties might prefer to adopt the current market trend rather than the most efficient approach. A large organization might allocate 3x more time to delivering a project than a small, specialized company would. In general, the inefficiencies of large, well-established organizations, some of which are unavoidable at that scale, are much better tolerated by the market than those of a startup under competitive pressure.

Thus, we should be careful about generalizations and mindful of the context.

Down with the Emperors, context is King

Hype tech FOMO comes at a price. Context-dependency is a thing!

A company that needs to continuously use large servers for intensive data processing will over the years be better off buying or renting dedicated hardware and assuming the small burden of server life cycle management. A company faced with financial uncertainty should avoid provider-specific dependencies as much as possible. A company that needs to manage a large number of small servers is likely a good fit for the Hetzner Cloud offer, given the cost efficiency it allows for. A company that needs a large variety of services, security-certified providers, and granular permissions might be better off with AWS, knowing that it will need sizable ops teams to deal with the platform's complexity. And in many cases a hybrid strategy makes sense and provides sensible risk balancing.

Engineering is not ideology - it requires a heavy dose of context awareness. But somehow context awareness doesn't make it to fancy startup pitches. On the slide deck the story must be simple even if reality is complex. So there you go: infinite scalability, zero downtime, elastic bla bla bla. And an unsustainable bill held in place by vendor lock-in, a bill you can't beat with FinOps.

The thing with Emperors and Cloudillos is that they either ignore how important context is or they completely misunderstand their own context. Either way, it's an expensive lesson for them to learn.

Further reading

- The Cost of Cloud, a Trillion Dollar Paradox

- The many lies about reducing complexity

- We have left the cloud

- Don't be fooled by serverless

- The cloud fugitive

- Uptime Guarantees — A Pragmatic Perspective

- Simplicity is An Advantage but Sadly Complexity Sells Better

- Does it scale? Who cares!

- That won't scale! Or present cost vs. future value